The Technology Behind “Call the Play”

24 Apr

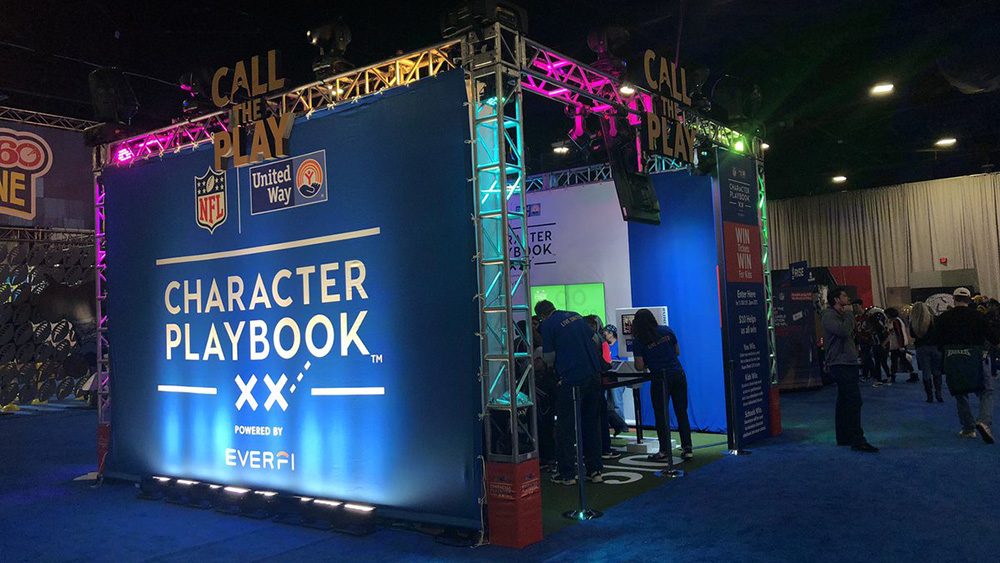

I had the pleasure of serving as Project Manager alongside Creative Director Jason Drakeford on an exciting project at Futurus this past January. Together with United Way of Greater Atlanta and the NFL, we produced “Call the Play,” a VR adaptation of Character Playbook, a Social and Emotional Learning (SEL) program created by United Way and the NFL. The experience immerses middle-school-aged students in tough, complex everyday situations and offers guidance on how to navigate conversations, manage emotions, and communicate effectively. It supports their decision-making, helping them gain the confidence to stand up for their peers and control their emotions during tough conversations with friends or adults.

“Call the Play” debuted at the Super Bowl Experience the week leading up to Super Bowl LIII in Atlanta, Georgia, where thousands of children and adults stopped by the booth and engaged with the game.

My team and I were fortunate to work with NFL Hall of Famer Jerry Rice in a state-of-the-art volumetric capture studio at the Creative Media Industries Institute at Georgia State University. Capturing his full-body performance gave us a realistic 3D model of him.

Demario Davis of the New Orleans Saints shared a true, personal story of how he stood up to a classmate’s bullies. Lorenzo Alexander of the Buffalo Bills guides players through a situation where you overhear classmates talking behind your friend’s back. Davis and Alexander were filmed in 2D video.

Once a young person puts on the HTC Vive Pro headset, they’re transported to the middle of Mercedes-Benz Stadium, the venue for Super Bowl LIII, where they are greeted by Jerry Rice. Portals appear, and the user chooses to hear a story from either Lorenzo Alexander of the Buffalo Bills or Demario Davis of the New Orleans Saints. The NFL player sets the stage and describes a situation where he witnessed someone getting bullied or talked about behind their back. Users are then asked how they would react. The scene plays out based on their selection, and the NFL player explains the consequences of that choice. Afterward, the student is transported back to Mercedes-Benz Stadium, where Jerry Rice congratulates them for making the call. The experience wraps up with celebratory fireworks.

“Call the Play” was built in Unity 3D as the game engine, combining volumetric capture, motion capture, 3D character and environment modeling and design, animation, 2D video, and scripting. Bringing all of these platforms together is what makes the experience truly unique, innovative, and interesting for users to play.

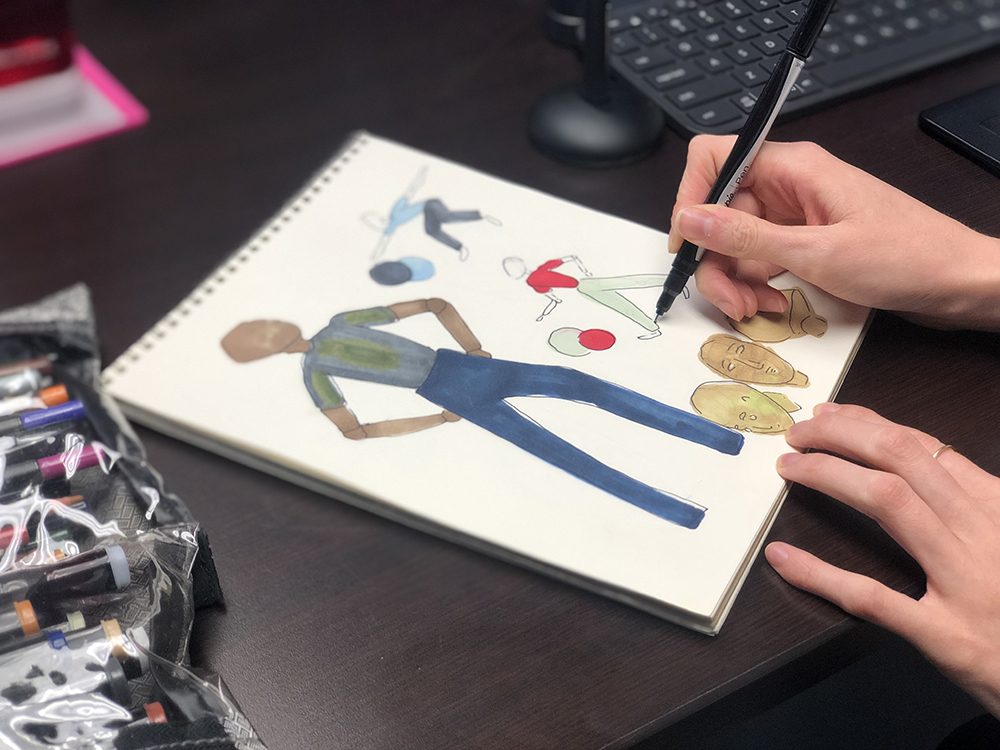

Shelby Vecchio, Art Director, describes her process for designing the 3D models and the environment –

The environment and character design process began with brainstorming multiple visual themes. We sketched environment and character concepts to define the color scheme, character design, and overall tone of the world. Experimenting with the color scheme and 3D modeling in Unity and Cinema 4D helped define the project’s visual assets. From the storyboard, we established each character’s role and placement within the scene. Once the thematic elements of the world were set, development of the environments and characters for the scenes began. There are three scenes in total: classroom, neighborhood, and stadium. To develop the environments, we had to integrate the color scheme and source props that fit the defined look. 3D modeling and UV mapping were completed in Maya, Cinema 4D, and Unity. Where an interaction depended on the color of an environment or object, the colors were manipulated programmatically in Unity.

Using the sketches from the brainstorming sessions, we created the character designs. Character design began in Fuse, using its female and male body meshes as templates. From these templates, 22 characters (20 kids and 2 adults) were sculpted. We made a point of creating a diverse group of characters to reflect the real school environments they represent. Once all 22 characters had been created, some were brought into Cinema 4D for UV mapping with unique textures and further customization. After modeling and UV mapping were complete, the characters were exported to Mixamo to be rigged for animation and then exported to Unity. In Unity, each character was given a cartoon shader so it would look as if it belonged in a graphic novel. The shader completed the characters’ look, leaving them ready for animation in Unity.

Pierce McBride, Experience Developer, shares his process of creating a character and environmental aesthetic from scratch when he couldn’t find one that he was happy with –

When we set out to design the environments, we started by looking at the places where these two NFL players grew up. Demario is from Mississippi, for example, and his story takes place on a neighborhood street. We used photos of neighborhoods in his hometown as references for the kinds of homes and cars we placed in his story. These reference photos also gave us ideas for elements we might not have thought of otherwise, like the church in the background of the neighborhood or the size of school hallways. It turns out school hallways are wider than we all remembered! That gave us a base to start from, but Jason, our Creative Director, also had ideas about how to push the look and feel of the experience even further.

We developed the comic book direction based on material provided by United Way. We wanted to keep the stadium realistic, to give the audience a WOW moment when they first put on the headset. Rendering real places like that is a strength of VR as a medium, so why not use it? The comic book look, by contrast, set apart the places described in the players’ stories. We hoped that going through the portals into these stories would look so different from the stadium that the audience would be blown away! We also knew we could pull off a comic book look by simplifying 3D models, color blocking them, and customizing tools like Toony Colors Pro 2. We tried other, more experimental ideas, like rendering characters with halftone dots or with moving, overlaid textures, but very few of those made their way into the final experience. Trying to match the look of 2D drawings in a 3D VR environment brought complications we didn’t anticipate, and ultimately we didn’t have time to work through them. Still, that effort pushed our knowledge of the medium and led to other ideas, like dimming the world when the audience makes a choice in the stories.

This project gave Futurus the opportunity to work with volumetric 3D capture footage from Georgia State University’s Creative Media Industries Institute. It was Experience Developer Peter Stolmeier’s job to realistically render a digital version of Jerry Rice. He also helped integrate the animations for our characters. Here’s his take –

One of the challenges virtual reality must overcome is creating realistic-looking people. Eventually, as technology improves, fully synthetic avatars will cross the uncanny valley and look authentic. Until then, volumetric capture can provide a convincing 3D video of a real person and their actions.

By filming a subject from every angle with multiple cameras, high-end photogrammetry software can approximate the subject’s volume and then stitch all the 2D footage together into a single 3D mesh and texture. Because the mesh and texture are swapped on every frame, full-motion characters like Jerry Rice look and behave as realistically as if they had been video-recorded. Unlike 2D video, however, cutting between takes makes the digital Jerry appear to glitch, because his movements don’t line up across the cut. As a result, each scripted line must be performed perfectly.
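Per-frame playback of a volumetric capture can be pictured as a lookup from elapsed time to one mesh/texture pair. The Python sketch below is a minimal illustration of that idea only, not the Unity implementation used in “Call the Play”; the `VolumetricFrame` and `VolumetricClip` names, the file naming scheme, and the 30 fps rate are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class VolumetricFrame:
    """One captured frame: a mesh plus the texture stitched from the 2D footage."""
    mesh_path: str     # hypothetical per-frame mesh file
    texture_path: str  # hypothetical per-frame texture file


class VolumetricClip:
    """Hypothetical player that swaps the mesh and texture on every frame."""

    def __init__(self, frames: List[VolumetricFrame], fps: float) -> None:
        if not frames:
            raise ValueError("a clip needs at least one frame")
        if fps <= 0:
            raise ValueError("fps must be positive")
        self.frames = list(frames)
        self.fps = fps

    def frame_at(self, t_seconds: float, loop: bool = False) -> VolumetricFrame:
        """Return the mesh/texture pair to display at time t_seconds."""
        index = int(t_seconds * self.fps)  # truncates toward zero
        if loop:
            index %= len(self.frames)  # wrap around to the start of the clip
        else:
            # Outside the clip, hold the first or last frame.
            index = min(max(index, 0), len(self.frames) - 1)
        return self.frames[index]


if __name__ == "__main__":
    clip = VolumetricClip(
        [VolumetricFrame(f"mesh_{i:04d}.obj", f"tex_{i:04d}.png") for i in range(90)],
        fps=30,
    )
    print(clip.frame_at(1.5).mesh_path)   # mesh_0045.obj
    print(clip.frame_at(10.0).mesh_path)  # mesh_0089.obj (held on the last frame)
```

In this representation each frame is a standalone mesh with no skeleton to blend between, which is consistent with the point above: jumping from the last frame of one take to the first frame of another shows up as a visible pop rather than a smooth transition.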

When we decided to make an interactive story, we knew it would take a lot of custom animations. Rather than using stock files that would only approximate what we wanted, we decided to perform them ourselves. We chose the Perception Neuron suit for motion tracking: it had all the articulation points we needed for this story, and its battery pack made it possible to capture wirelessly over a long range. After securing a big enough space, we marked out the floor and had actors play out all the characters at 1:1 scale. The results were great; even though we had only one suit to work with, we were able to get unique, interactive animations for the teachers, students, and bullies in “Call the Play.”

Chan Grant, Experience Developer, performed most of the 3D characters’ custom animations, and no one could have portrayed them better. He explains how he did it with the motion capture suit –

The Perception Neuron mocap suit was a very useful tool for capturing animations and movements.

The setup was a bit of a hassle, but with others helping, it took me five to ten minutes to get in and out of the suit. The suit has an amazing range, both in the distance I could move and in the movements it could track, which is impressive considering there are no external cameras tracking my movements. The suit must be strapped on tightly to keep the sensors from moving or sliding, which made certain poses uncomfortable. The Perception Neuron was also surprisingly sensitive, so I had to be careful when moving or bending to keep the animation consistently natural and accurate.

When recording animations, the first step is to zero out the position and calibrate the device. Over time, the tracking would gradually lose its orientation and need to be reset. Next, I would move to the character’s starting location. I planned my physical movements to match the basic paths I expected the on-screen characters to follow, paying closest attention to walking distance. If a character had to walk five meters, turn left, and walk two meters, I mimicked that behavior. Finally, the animation is reviewed, critiqued, and redone if needed.
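Matching a character’s on-screen path, such as “walk five meters, turn left, and walk two meters,” comes down to turning walk-and-turn instructions into floor positions that can be marked out at 1:1 scale. The Python sketch below is a hypothetical illustration, not part of the production pipeline; the `floor_marks` function is invented for this example, and it assumes a flat floor, 90-degree turns only, and a walker who starts at the calibration origin facing +y.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def floor_marks(start: Point, steps: List[Tuple[float, str]]) -> List[Point]:
    """Return floor positions (in meters) at the start and at the end of each segment.

    Each step is (distance_m, turn_after), where turn_after is "left", "right",
    or "none" and is applied after walking the distance. The walker starts at
    `start` facing +y; only 90-degree turns are modeled (a simplifying assumption).
    """
    x, y = start
    dx, dy = 0.0, 1.0  # unit heading vector, initially +y
    marks = [start]
    for distance, turn in steps:
        # Walk straight along the current heading, then record a floor mark.
        x += dx * distance
        y += dy * distance
        marks.append((x, y))
        if turn == "left":
            dx, dy = -dy, dx  # rotate heading 90 degrees counterclockwise
        elif turn == "right":
            dx, dy = dy, -dx  # rotate heading 90 degrees clockwise
        elif turn != "none":
            raise ValueError(f"unknown turn: {turn!r}")
    return marks


if __name__ == "__main__":
    # Walk five meters, turn left, walk two meters.
    print(floor_marks((0.0, 0.0), [(5.0, "left"), (2.0, "none")]))
    # [(0.0, 0.0), (0.0, 5.0), (-2.0, 5.0)]
```

Restricting the sketch to right-angle turns keeps the heading vector exact, so the marks come out as clean meter values that are easy to tape out on the floor.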

I had never acted on film or on a stage before, but working on the set of this project is the closest I’ve come. Jason Drakeford and Peter Stolmeier gave me direction, provided feedback, and offered support. Paul Welch, Experience Developer, was also our cameraman, monitoring the motions we were creating in the software. Talk about star treatment – I didn’t even need to get my own drinks! My team brought them to me as I moved around the set! I could get used to that.

Thank you to the entire project team!

Without the efforts and teamwork of everyone involved in this project with me, “Call the Play” would have remained just another idea. I also want to extend my appreciation to the following individuals, who worked on this project outside of the development of this immersive story:

Futurus

Annie Eaton – Executive Producer

Amy Stout – Project Manager

Jamie Lance – Logistics

United Way of Greater Atlanta

Liz Ward – Executive Producer

Sara Fleeman – Producer

Catherine Owens – Producer

Joe Bevill – Art Director

Chad Parker – Media Director

Hannah Tweel – Social Director

Bradley Roberts – Writer

Georgia State University Creative Media Industries Institute

Candice Alger – Executive Producer of Volumetric

James C. Martin – Volumetric Director

Joel Austin Mack – Volumetric Engineer

Quinn Randel – Videographer

Derek Jackson – Audio Engineer

Lisa Ferrel – Associate Producer